Mobile apps are easier to build than ever — but that doesn’t mean they’re safe.

Late last month, Tea, a buzzy app where women anonymously share reviews of men, suffered a data breach that exposed thousands of images and private messages.

As cybersecurity expert Michael Coates put it, the impact of Tea’s breach was that it exposed data “otherwise assumed to be private and sensitive” to anyone with the “technical acumen” to access that user data — and “ergo, the whole world.”

Tea confirmed that about 72,000 images — including women’s selfies and driver’s licenses — had been accessed. Images from the app were then posted to 4chan, and within days, that information spread across the web on platforms like X. Someone made a map identifying users’ locations, and a website where Tea users’ verification selfies were ranked side-by-side.

It wasn’t just images that were accessible. Kasra Rahjerdi, a security researcher, told Business Insider he was able to access more than 1.1 million private direct messages (DMs) between Tea’s users. Rahjerdi said those messages included “intimate” conversations about topics such as divorce, abortion, cheating, and rape.

The Tea breach was a stark reminder that assuming our data is private doesn't mean it actually is, especially when it comes to new apps.

“Talking to an app is talking to a really gossipy coworker,” Rahjerdi said. “If you tell them anything, they’re going to share it, at least with the owners of the app, if not their advertisers, if not accidentally with the world.”

Isaac Evans, CEO of cybersecurity company Semgrep, said he uncovered an issue similar to the Tea breach when he was a student at MIT. A directory of students’ names and IDs was left open for the public to view.

“It’s just really easy, when you have a big bucket of data, to accidentally leave it out in the open,” Evans said.

But despite the risks, many people are willing to share sensitive information with new apps. In fact, even after news of the Tea data breach broke, the app continued to sit near the top of Apple’s App Store charts. On Monday, it was in the No. 4 slot on the chart behind only ChatGPT, Threads, and Google.

Tea declined to comment.

Cybersecurity in the AI era

The cybersecurity issues raised by the Tea app breach — namely that emerging apps can often be less secure and that people are willing to hand over very sensitive information to them — could get even worse in the era of AI.

Why? There are a few reasons.

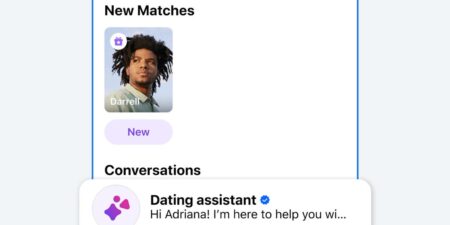

First, there’s the fact that people are getting more comfortable sharing sensitive information with apps, especially AI chatbots, whether that’s ChatGPT, Meta AI, or specialized chatbots trying to replicate therapy. This has already led to mishaps. Take Meta’s AI app’s “discover” feed, for example. In June, Business Insider reported that people were publicly sharing — seemingly accidentally — some quite personal exchanges with Meta’s AI chatbot.

Then there’s the rise of vibe coding, which security experts say could lead to dangerous app vulnerabilities.

Vibe coding, in which people use generative AI to write and refine code, has been a favorite tech buzzword this year. Meanwhile, tech startups like Replit, Lovable, and Cursor have become highly valued vibe-coding darlings.

But as vibe coding becomes more mainstream — and potentially leads to a geyser of new apps — cybersecurity experts have concerns.

Brandon Evans, a senior instructor at the SANS Institute and cybersecurity consultant, told BI that vibe coding can “absolutely result in more insecure applications,” especially as people build quickly and take shortcuts.

(It’s worth noting that while some public discourse on social media around Tea’s breach includes criticisms of vibe coding, some security experts said they doubted the platform itself used AI to generate its code.)

“One of the big risks about vibe coding and AI-generated software is what if it doesn’t do security?” Coates said. “That’s what we’re all pretty concerned about.”

Rahjerdi told BI that the advent of vibe coding is what prompted him to start investigating “more and more projects recently.”

For Semgrep’s Evans, vibe coding itself isn’t the problem — it’s how it interacts with developers’ incentives more generally. Programmers often want to move fast, he said, speeding through the security review process.

“Vibe-coding means that a junior programmer can suddenly be inside a racecar, rather than a minivan,” he said.

But whether an app is vibe coded or not, people should "actively think about what you're sending to these organizations and really think about the worst case scenario," the SANS Institute's Evans said.

“Consumers need to understand that there will be more breaches, not just because applications are being developed faster and arguably worse, but also because the adversaries have AI on their side as well,” he added. “They can use AI to come up with new attacks to get this data too.”
