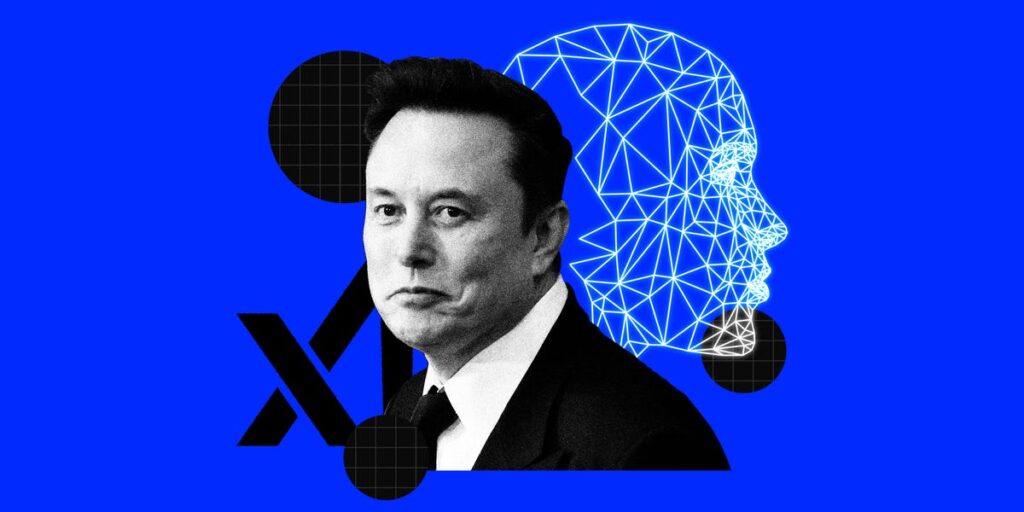

Workers at Elon Musk’s xAI have been asked to instill anti-“wokeness” in Grok and stop the chatbot from impersonating Musk. Recently, some were also asked to record their facial expressions to train the LLM — and they weren’t happy.

In April, more than 200 employees took part in an internal project called “Skippy,” which involved recording videos of themselves to help train the AI model to interpret human emotions.

Internal documents and Slack messages viewed by Business Insider show that the project left many workers uneasy, with some raising alarms about how their likenesses might be used. Others opted out entirely.

Over a weeklong period, AI tutors — the workers who help train Grok, the company’s large language model — were tasked with recording videos of themselves speaking to coworkers as well as making facial expressions, internal documents show.

The project was designed to train the company’s AI model to “recognize and analyze facial movements and expressions, such as how people talk, react to others’ conversations, and express themselves in various conditions,” according to one document.

The tutors were scheduled for 15- to 30-minute conversations with their coworkers. One person played the part of the “host” (the virtual assistant), and the other took on the role of a user. The “host” minimized their movements and prioritized proper framing, while those playing the user could film from a cellphone camera or computer and move freely to simulate a casual conversation with a friend.

It’s unclear whether that training data had any role in powering Rudi and Ani, two lifelike avatars that xAI released last week that were quickly shown stripping, flirting, and threatening to bomb banks.

The lead engineer on the project told workers during an introductory meeting that the project would help “give Grok a face,” according to a recording viewed by BI. The project lead said that the company might eventually use the data to build out “avatars of people.”

The project lead said xAI wanted imperfect data — background noise and sudden movements, for example — because the AI system would be more limited in its responses if it were trained solely on perfect video and audio feedback.

The project lead also told staff that the videos would not be distributed outside the company and were solely for training purposes.

“Your face will not ever make it to production,” the engineer on the project told workers during the kick-off call. “It’s purely to teach Grok what a face is.”

The workers were given tips on how to have a successful one-on-one conversation, including avoiding one-word answers, asking follow-up questions, and maintaining eye contact. The company also supplied staff with a variety of conversation topics. Examples included: “How do you secretly manipulate people to get your way?”, “What about showers? Do you prefer morning or night?”, and “Would you ever date someone with a kid or kids?”

Before filming, workers were required to sign a consent form granting xAI “perpetual” access to the data, including the workers’ “likeness” for training and also for “inclusion in and promotion of commercial products and services offered by xAI.” The form specified the data would be used for training purposes and “not to create a digital version of you.”

Dozens of workers expressed concerns about the use of the data and the consent form, and several said they chose to opt out of the program, according to Slack messages viewed by BI.

“My general concern is if you’re able to use my likeness and give it that sublikeness, could my face be used to say something I never said?” one worker said during the introductory meeting.

A spokesperson for xAI did not respond to a request for comment.

In April, xAI launched a feature that allowed users to video chat with Grok.

On July 14, the company released its Ani and Rudi avatars, a few days after its larger Grok 4 release. The two animated characters respond to questions and commands. When they talk, their lips move and they make realistic gestures.

The female avatar, Ani, has had sexually explicit conversations with users and can be prompted to remove her clothing, videos posted by users on X show. The other avatar, a red panda named Rudi, can be prompted to make violent threats, including bombing banks and killing billionaires, user videos show.

Musk’s AI company posted a new job focused on developing avatars on July 15. Musk said on Wednesday the company is working on a Grok companion inspired by Edward Cullen from “Twilight” and Christian Grey from “50 Shades of Grey.”

On July 9, xAI’s chatbot sparked backlash after it went on an antisemitic rant. Workers within the company erupted over the posts, and xAI apologized for the chatbot’s behavior on X.

On July 12, the company released a Grok variant for Tesla owners and a $300-per-month subscription plan for a more sophisticated version of Grok, called SuperGrok Heavy.

Do you work for xAI or have a tip? Contact this reporter on Signal at 248-894-6012 or by email. Use a personal email address, a nonwork device, and nonwork WiFi; here’s our guide to sharing information securely.

Read the full article here