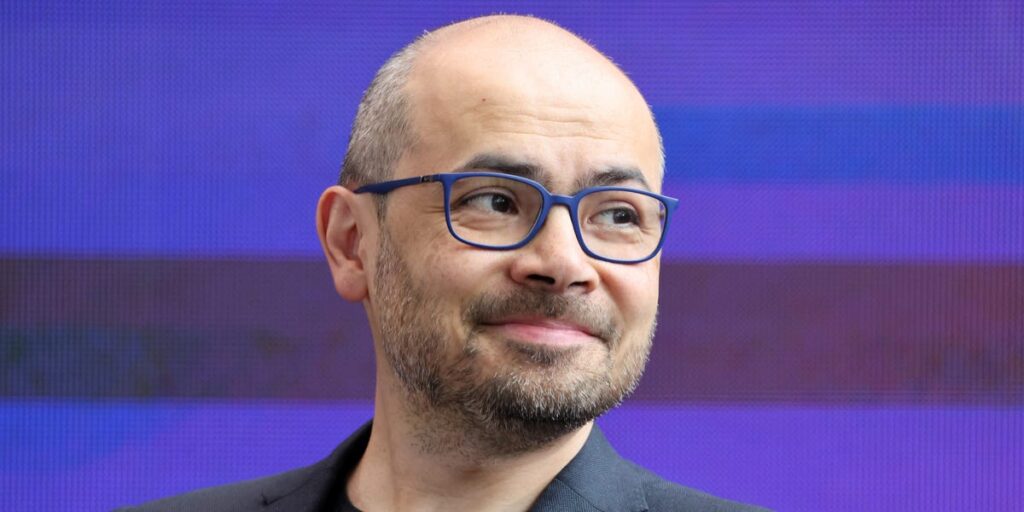

The one thing keeping AI from full AGI? Consistency, said Google DeepMind CEO Demis Hassabis.

Hassabis said on an episode of the “Google for Developers” podcast published Tuesday that advanced models like Google’s Gemini still stumble over problems most schoolkids could solve.

“It shouldn’t be that easy for the average person to just find a trivial flaw in the system,” he said.

He pointed to Gemini models enhanced with DeepThink — a reasoning-boosting technique — that can win gold medals at the International Mathematical Olympiad, the world’s most prestigious math competition.

But those same systems can “still make simple mistakes in high school maths,” he said, calling them “uneven intelligences” or “jagged intelligences.”

“Some dimensions, they’re really good; other dimensions, their weaknesses can be exposed quite easily,” he added.

Hassabis’s position aligns with that of Google CEO Sundar Pichai, who has dubbed the current stage of development “AJI,” short for artificial jagged intelligence. Pichai used the term on an episode of Lex Fridman’s podcast that aired in June to describe systems that excel in some areas but fail in others.

Hassabis said solving AI’s issues with inconsistency will take more than scaling up data and computing. “Some missing capabilities in reasoning and planning in memory” still need to be cracked, he added.

He said the industry also needs better testing and “new, harder benchmarks” to determine precisely what the models excel at, and what they don’t.

Hassabis and Google did not respond to a request for comment from Business Insider.

Big Tech hasn’t cracked AGI

Big Tech players like Google and OpenAI are working toward achieving AGI, a theoretical threshold at which AI can reason like humans.

Hassabis said in April that AGI will arrive “in the next five to 10 years.”

AI systems remain prone to hallucinations, misinformation, and basic errors.

OpenAI CEO Sam Altman had a similar take ahead of last week’s launch of GPT-5. While calling his firm’s model a significant advancement, he told reporters it still falls short of true AGI.

“This is clearly a model that is generally intelligent, although I think in the way that most of us define AGI, we’re still missing something quite important, or many things quite important,” Altman said during a press call on Wednesday before the release of GPT-5.

Altman added that one of those missing elements is the model’s ability to learn independently.

“One big one is, you know, this is not a model that continuously learns as it’s deployed from the new things it finds, which is something that to me feels like AGI. But the level of intelligence here, the level of capability, it feels like a huge improvement,” he said.
