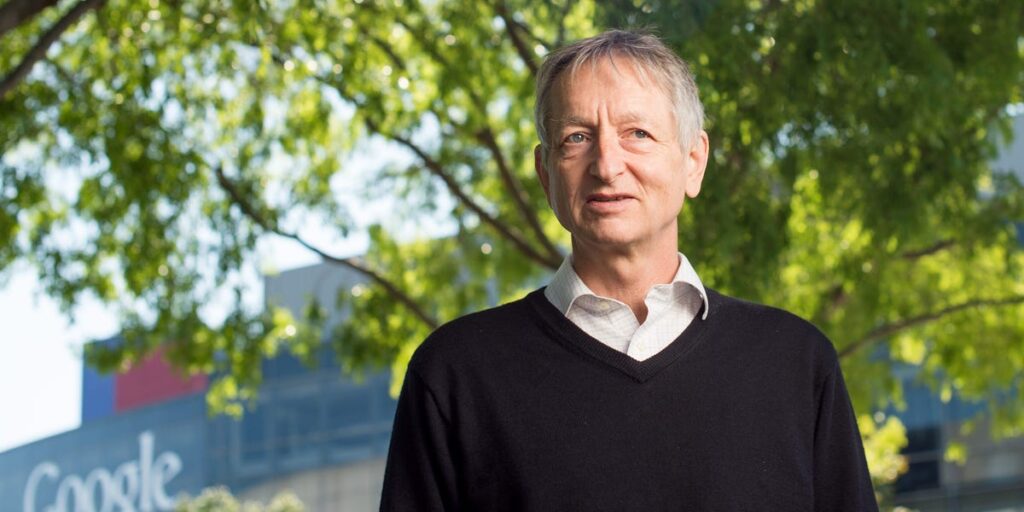

To hit on ideas that could eventually develop into breakthroughs, the “Godfather of AI,” Geoffrey Hinton, says you have to be “contrarian.”

“You have to have a deep belief that everybody else could be doing things wrong, and you could figure out how to do them right,” Hinton said in a recent interview with CBS. “And most people don’t believe that about themselves.”

Hinton was awarded the 2024 Nobel Prize in Physics for his work in machine learning and has previously warned of the possible existential risks of AI.

Asked what advice he'd give the next generation of AI researchers, Hinton suggested searching for inefficiencies. Ideas in this vein often lead to dead ends, he said, but when one pans out, there's a chance you're onto something big.

“You should look for something where you figure out everybody’s doing it wrong and you think there’s a different way of doing it,” Hinton said. “And you should pursue that until you understand why you’re wrong. But just occasionally, that’s how you get good new ideas.”

“Intellectual self-confidence” can be inherent or acquired — in Hinton’s case, he said it was part nature, part nurture.

“My father was like that,” he said. “So that was a role model for being contrarian.”

Hinton said he spent years thinking up ways that existing systems could be challenged — and that he got it wrong far more often than he got it right.

“I spent decades having lots and lots of ideas about how to do things differently,” he said. “Nearly all of which were wrong, but just occasionally, they were right.”

Hinton did not respond to a request for comment from Business Insider before publication.

Even now, Hinton still views himself as operating beyond the norm. He said that attitude is essential — if you’re not attached to existing methods of doing things, you’ll find it easier to challenge them.

“It requires you to think of yourself as an outsider,” he said. “I’ve always thought of myself as an outsider. I’m rather unhappy with the situation now where I’m a kind of insider. I’d rather be an outsider.”

Hinton, who told CBS he uses OpenAI’s GPT-4 and trusts it more than he should, has previously warned of the potential dangers of AI.

In a 2023 email to Business Insider, Hinton said humans should be “very concerned” about the rate of progress in AI development.

Hinton at the time estimated that it could be between five and 20 years before AI becomes a real threat, and even longer for the technology to become a threat to humanity — if it ever does.

“It is still possible that the threat will not materialize,” he previously told BI.
