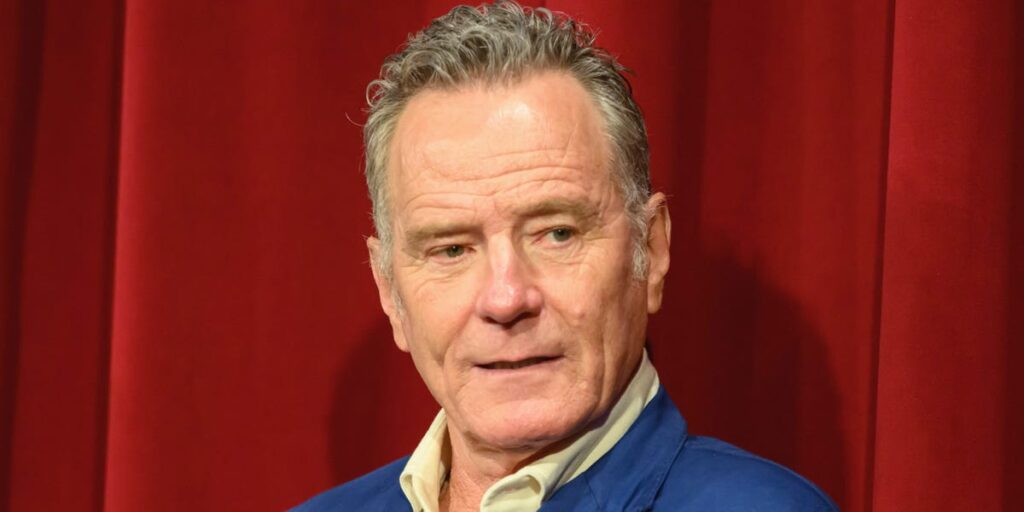

- OpenAI is working with Bryan Cranston and Hollywood groups to limit deepfakes on Sora 2.

- Cranston raised concerns to SAG-AFTRA after his likeness was replicated without his consent.

- OpenAI said it has improved guardrails around the replication of an individual’s voice and likeness.

OpenAI is working with actor Bryan Cranston and other Hollywood groups to limit deepfakes made with its Sora 2 video app, the company said Monday in a joint statement.

The “Breaking Bad” actor voiced concerns to SAG-AFTRA after his voice and likeness were replicated in the video generator, following its invite-only launch this fall.

“I was deeply concerned not just for myself, but for all performers whose work and identity can be misused in this way,” Cranston said in the statement. The statement came from OpenAI, Cranston, SAG-AFTRA, United Talent Agency, Creative Artists Agency, and the Association of Talent Agents.

OpenAI said its policy was intended to require people to opt in before Sora can use their name and likeness, and the statement said the company “expressed regret for these unintentional generations.”

“OpenAI has strengthened guardrails around replication of voice and likeness when individuals do not opt-in,” the statement said.

The company said it has an opt-in policy for the use of any individual’s voice and likeness, and that all artists have the right to determine how and when they are simulated. It also said it is committed to responding quickly to any complaints.

The statement said OpenAI’s policy aligns with the NO FAKES Act, proposed federal legislation intended to protect individuals’ voices and likenesses from unauthorized AI-generated replicas.

Since launching Sora 2, OpenAI has already announced changes that would give more power to intellectual property holders following backlash and copyright concerns.

Last week, OpenAI announced it would pause AI-generated videos of Martin Luther King Jr. on Sora after the civil rights leader’s estate raised concerns with the company.
