AGI is a pretty silly debate. It’s only really important in one way: It governs how the world’s most important AI partnership will change in the coming months. That’s the deal between OpenAI and Microsoft.

This is the situation right now: Until OpenAI achieves Artificial General Intelligence — where AI capabilities surpass those of humans — Microsoft gets a lot of valuable technological and financial benefits from the startup. For instance, OpenAI must share a significant portion of its revenue with Microsoft. That’s billions of dollars.

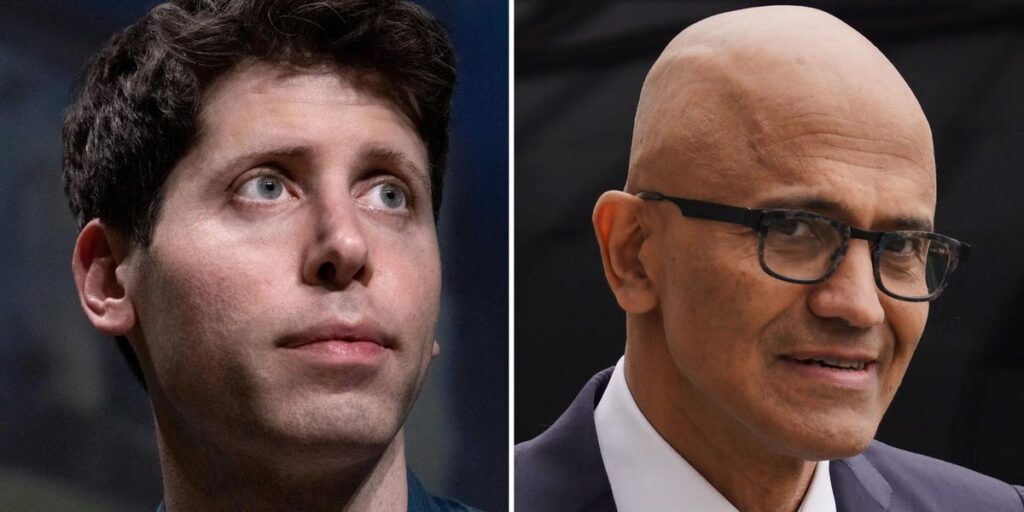

One could reasonably argue that this is why Sam Altman keeps banging on about OpenAI being close to AGI.

Many other AI experts rarely talk about AGI, or they think the debate is misguided or just not that important. Even Anthropic CEO Dario Amodei, one of the biggest AI boosters on the planet, doesn't like to talk about AGI.

Microsoft CEO Satya Nadella sees things very differently. Wouldn't you? If another company were contractually required to keep giving you oodles of money until it reached AGI, you probably wouldn't think we're close to AGI either!

Nadella has dismissed self-declared AGI milestones as "benchmark hacking," which is so delicious. The term refers to AI researchers and labs designing models to perform well on wonky industry benchmarks rather than on real-world tasks.

Here’s OpenAI’s official definition of AGI: “highly autonomous systems that outperform humans at most economically valuable work.”

Other experts have defined it slightly differently. But the main point is that AI systems must be better than humans at a wide variety of useful tasks. You can already train an AI model to beat humans at one or two specific things, but to reach artificial general intelligence, machines must be able to do many different things better than humans.

My real-world AGI tests

Over the past few months, I've devised several real-world tests to see if we've reached AGI. These are fun or annoying everyday things that should just work in a world of AGI, but they don't work for me right now. I also canvassed readers of my Tech Memo newsletter and tapped my source network for fun suggestions.

Here are my real-world tests that will prove we’ve reached AGI:

- The PR departments of OpenAI and Anthropic use their own AI technology to answer every journalist’s question. Right now, these companies are hiring a ton of human journalists and other communications experts to handle a barrage of reporter questions about AI and the future. When I reach out to these companies, humans answer every time. Unacceptable! Unless this changes, we’re not at AGI.

- This suggestion is from a hedge fund contact, and I love it: Please, please can my Microsoft Outlook email system stop burying important emails while still letting spam through? This one seems like something Microsoft and OpenAI could solve with their AI technology. I haven’t seen a fix yet.

- In a similar vein, can someone please stop Cactus Warehouse from texting me every two days with offers for 20% off succulents? I bought one cactus from you guys, once! Come on, AI, this can surely be solved!

- My 2024 Tesla Model 3 Performance hits potholes when it's driving in Full Self-Driving (FSD) mode. No wonder tires have to be replaced so often on these EVs. As a human, I'm much better at avoiding potholes. Elon, the AGI gauntlet has been thrown down. Get on this now.

- Can AI models and chatbots make valuable predictions about the future, or do they mostly just regurgitate what's already known on the internet? I tested this recently, right after the US bombed Iran, by pitting ChatGPT's stock-picking ability against a single human analyst. Check out the results here. TL;DR: We are nowhere near AGI on this one.

- There’s a great Google Gemini TV ad where a kid is helping his dad assemble a basketball net. The son is using an Android phone to ask Gemini for the instructions and pointing the camera at his poor father struggling with parts and tools. It’s really impressive to watch as Gemini finds the instruction manual online just by “seeing” what’s going on live with the product assembly. For AGI to be here, though, the AI needs to just build the damn net itself. I can sit there and read out instructions in an annoying way, while someone else toils with fiddly assembly tasks — we can all do that.

Yes, I know these tests seem a bit silly — but AI benchmarks are not the real world, and they can be pretty easily gamed.

That last basketball net test is particularly telling for me. Getting an AI system to actually assemble a basketball net might happen sometime soon. But getting the same system to do lots of other physical-world manipulation tasks better than humans, too? That's very hard and probably not possible for a very long time.

As OpenAI and Microsoft try to resolve their differences, the companies can tap experts to weigh in on whether the startup has reached AGI or not, per the terms of their existing contract, according to The Information. I’m happy to be an expert advisor here. Sam and Satya, let me know if you want help!

For now, I'll leave the final words to a real AI expert. Konstantin Mishchenko, an AI research scientist at Meta, recently tweeted this while citing a blog post by Sergey Levine, another respected expert in the field:

“While LLMs learned to mimic intelligence from internet data, they never had to actually live and acquire that intelligence directly. They lack the core algorithm for learning from experience. They need a human to do that work for them,” Mishchenko wrote, referring to AI models known as large language models.

“This suggests, at least to me, that the gap between LLMs and genuine intelligence might be wider than we think. Despite all the talk about AGI either being already here or coming next year, I can’t shake off the feeling it’s not possible until we come up with something better than a language model mimicking our own idea of how an AI should look,” he concluded.

Sign up for BI’s Tech Memo newsletter here. Reach out to me via email at abarr@businessinsider.com.
